This page provides the normalized Iris data set used in the post "Iris Plant Classification Using Neural Network – Online Experiments with Normalization and Other Parameters". The data set is split into a training set (141 records) and a testing set (9 records, 3 per class). Class labels are listed separately, after the feature values.

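Each value x was normalized column by column with min-max scaling, (x - min) / (max - min), so that every feature falls in the range [0, 1]. The column statistics used are: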

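| | Sepal length | Sepal width | Petal length | Petal width |
|---|---|---|---|---|
| min | 4.3 | 2 | 1 | 0.1 |
| max | 7.9 | 4.4 | 6.9 | 2.5 |
| max-min | 3.6 | 2.4 | 5.9 | 2.4 |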

Here min, max and max-min are computed over each column of the Iris data set. The columns, in order, are:

1. sepal length in cm

2. sepal width in cm

3. petal length in cm

4. petal width in cm
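A minimal Python sketch of min-max scaling with the statistics above, assuming raw measurements in cm given in the column order listed. The names `COL_MIN`, `COL_MAX`, `COL_RANGE` and `normalize` are illustrative and not taken from the original experiments. The raw record (4.6, 3.1, 1.5, 0.2) reproduces the first row of the training set below.

```python
# Column statistics from the table above, in the order:
# sepal length, sepal width, petal length, petal width (cm).
COL_MIN = (4.3, 2.0, 1.0, 0.1)
COL_MAX = (7.9, 4.4, 6.9, 2.5)
COL_RANGE = tuple(hi - lo for lo, hi in zip(COL_MIN, COL_MAX))  # max - min


def normalize(record):
    """Min-max scale one raw record (4 values in cm): (x - min) / (max - min)."""
    return tuple((x - lo) / r for x, lo, r in zip(record, COL_MIN, COL_RANGE))


print([round(v, 9) for v in normalize((4.6, 3.1, 1.5, 0.2))])
# → [0.083333333, 0.458333333, 0.084745763, 0.041666667]
```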

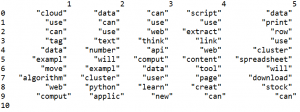

Training data set:

0.083333333 0.458333333 0.084745763 0.041666667

0.194444444 0.666666667 0.06779661 0.041666667

0.305555556 0.791666667 0.118644068 0.125

0.083333333 0.583333333 0.06779661 0.083333333

0.194444444 0.583333333 0.084745763 0.041666667

0.027777778 0.375 0.06779661 0.041666667

0.166666667 0.458333333 0.084745763 0

0.305555556 0.708333333 0.084745763 0.041666667

0.138888889 0.583333333 0.101694915 0.041666667

0.138888889 0.416666667 0.06779661 0

0 0.416666667 0.016949153 0

0.416666667 0.833333333 0.033898305 0.041666667

0.388888889 1 0.084745763 0.125

0.305555556 0.791666667 0.050847458 0.125

0.222222222 0.625 0.06779661 0.083333333

0.388888889 0.75 0.118644068 0.083333333

0.222222222 0.75 0.084745763 0.083333333

0.305555556 0.583333333 0.118644068 0.041666667

0.222222222 0.708333333 0.084745763 0.125

0.083333333 0.666666667 0 0.041666667

0.222222222 0.541666667 0.118644068 0.166666667

0.138888889 0.583333333 0.152542373 0.041666667

0.194444444 0.416666667 0.101694915 0.041666667

0.194444444 0.583333333 0.101694915 0.125

0.25 0.625 0.084745763 0.041666667

0.25 0.583333333 0.06779661 0.041666667

0.111111111 0.5 0.101694915 0.041666667

0.138888889 0.458333333 0.101694915 0.041666667

0.305555556 0.583333333 0.084745763 0.125

0.25 0.875 0.084745763 0

0.333333333 0.916666667 0.06779661 0.041666667

0.166666667 0.458333333 0.084745763 0

0.194444444 0.5 0.033898305 0.041666667

0.333333333 0.625 0.050847458 0.041666667

0.166666667 0.458333333 0.084745763 0

0.027777778 0.416666667 0.050847458 0.041666667

0.222222222 0.583333333 0.084745763 0.041666667

0.194444444 0.625 0.050847458 0.083333333

0.055555556 0.125 0.050847458 0.083333333

0.027777778 0.5 0.050847458 0.041666667

0.194444444 0.625 0.101694915 0.208333333

0.222222222 0.75 0.152542373 0.125

0.138888889 0.416666667 0.06779661 0.083333333

0.222222222 0.75 0.101694915 0.041666667

0.083333333 0.5 0.06779661 0.041666667

0.277777778 0.708333333 0.084745763 0.041666667

0.194444444 0.541666667 0.06779661 0.041666667

0.333333333 0.125 0.508474576 0.5

0.611111111 0.333333333 0.610169492 0.583333333

0.388888889 0.333333333 0.593220339 0.5

0.555555556 0.541666667 0.627118644 0.625

0.166666667 0.166666667 0.389830508 0.375

0.638888889 0.375 0.610169492 0.5

0.25 0.291666667 0.491525424 0.541666667

0.194444444 0 0.423728814 0.375

0.444444444 0.416666667 0.542372881 0.583333333

0.472222222 0.083333333 0.508474576 0.375

0.5 0.375 0.627118644 0.541666667

0.361111111 0.375 0.440677966 0.5

0.666666667 0.458333333 0.576271186 0.541666667

0.361111111 0.416666667 0.593220339 0.583333333

0.416666667 0.291666667 0.525423729 0.375

0.527777778 0.083333333 0.593220339 0.583333333

0.361111111 0.208333333 0.491525424 0.416666667

0.444444444 0.5 0.644067797 0.708333333

0.5 0.333333333 0.508474576 0.5

0.555555556 0.208333333 0.661016949 0.583333333

0.5 0.333333333 0.627118644 0.458333333

0.583333333 0.375 0.559322034 0.5

0.638888889 0.416666667 0.576271186 0.541666667

0.694444444 0.333333333 0.644067797 0.541666667

0.666666667 0.416666667 0.677966102 0.666666667

0.472222222 0.375 0.593220339 0.583333333

0.388888889 0.25 0.423728814 0.375

0.333333333 0.166666667 0.474576271 0.416666667

0.333333333 0.166666667 0.457627119 0.375

0.416666667 0.291666667 0.491525424 0.458333333

0.472222222 0.291666667 0.694915254 0.625

0.305555556 0.416666667 0.593220339 0.583333333

0.472222222 0.583333333 0.593220339 0.625

0.666666667 0.458333333 0.627118644 0.583333333

0.555555556 0.125 0.576271186 0.5

0.361111111 0.416666667 0.525423729 0.5

0.333333333 0.208333333 0.508474576 0.5

0.333333333 0.25 0.576271186 0.458333333

0.5 0.416666667 0.610169492 0.541666667

0.416666667 0.25 0.508474576 0.458333333

0.194444444 0.125 0.389830508 0.375

0.361111111 0.291666667 0.542372881 0.5

0.388888889 0.416666667 0.542372881 0.458333333

0.388888889 0.375 0.542372881 0.5

0.527777778 0.375 0.559322034 0.5

0.222222222 0.208333333 0.338983051 0.416666667

0.388888889 0.333333333 0.525423729 0.5

0.555555556 0.541666667 0.847457627 1

0.416666667 0.291666667 0.694915254 0.75

0.777777778 0.416666667 0.830508475 0.833333333

0.555555556 0.375 0.779661017 0.708333333

0.611111111 0.416666667 0.813559322 0.875

0.916666667 0.416666667 0.949152542 0.833333333

0.166666667 0.208333333 0.593220339 0.666666667

0.833333333 0.375 0.898305085 0.708333333

0.666666667 0.208333333 0.813559322 0.708333333

0.805555556 0.666666667 0.86440678 1

0.611111111 0.5 0.694915254 0.791666667

0.583333333 0.291666667 0.728813559 0.75

0.694444444 0.416666667 0.762711864 0.833333333

0.388888889 0.208333333 0.677966102 0.791666667

0.416666667 0.333333333 0.694915254 0.958333333

0.583333333 0.5 0.728813559 0.916666667

0.611111111 0.416666667 0.762711864 0.708333333

0.944444444 0.75 0.966101695 0.875

0.944444444 0.25 1 0.916666667

0.472222222 0.083333333 0.677966102 0.583333333

0.722222222 0.5 0.796610169 0.916666667

0.361111111 0.333333333 0.661016949 0.791666667

0.944444444 0.333333333 0.966101695 0.791666667

0.555555556 0.291666667 0.661016949 0.708333333

0.666666667 0.541666667 0.796610169 0.833333333

0.805555556 0.5 0.847457627 0.708333333

0.527777778 0.333333333 0.644067797 0.708333333

0.5 0.416666667 0.661016949 0.708333333

0.583333333 0.333333333 0.779661017 0.833333333

0.805555556 0.416666667 0.813559322 0.625

0.861111111 0.333333333 0.86440678 0.75

1 0.75 0.915254237 0.791666667

0.583333333 0.333333333 0.779661017 0.875

0.555555556 0.333333333 0.694915254 0.583333333

0.5 0.25 0.779661017 0.541666667

0.944444444 0.416666667 0.86440678 0.916666667

0.555555556 0.583333333 0.779661017 0.958333333

0.583333333 0.458333333 0.762711864 0.708333333

0.472222222 0.416666667 0.644067797 0.708333333

0.722222222 0.458333333 0.745762712 0.833333333

0.666666667 0.458333333 0.779661017 0.958333333

0.722222222 0.458333333 0.694915254 0.916666667

0.416666667 0.291666667 0.694915254 0.75

0.694444444 0.5 0.830508475 0.916666667

0.666666667 0.541666667 0.796610169 1

0.666666667 0.416666667 0.711864407 0.916666667

0.555555556 0.208333333 0.677966102 0.75
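The rows above can be parsed straight from the page layout (one record per line, four space-separated values, blank lines between records) and, if needed, mapped back to centimetres by inverting the scaling: x = x' * (max - min) + min. A sketch, assuming the rows are pasted into a string in page order; `parse_rows` and `denormalize` are hypothetical helper names.

```python
COL_MIN = (4.3, 2.0, 1.0, 0.1)
COL_MAX = (7.9, 4.4, 6.9, 2.5)


def parse_rows(text):
    """Parse whitespace-separated records, skipping blank separator lines."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue  # blank line between records
        if len(fields) != 4:
            raise ValueError(f"expected 4 values, got {len(fields)}: {line!r}")
        rows.append(tuple(float(f) for f in fields))
    return rows


def denormalize(row):
    """Invert min-max scaling: x = x' * (max - min) + min, in cm."""
    return tuple(v * (hi - lo) + lo for v, lo, hi in zip(row, COL_MIN, COL_MAX))


# First two training rows, copied in the page's layout.
sample = """
0.083333333 0.458333333 0.084745763 0.041666667

0.194444444 0.666666667 0.06779661 0.041666667
"""

for row in parse_rows(sample):
    print([round(x, 2) for x in denormalize(row)])
# → [4.6, 3.1, 1.5, 0.2]
# → [5.0, 3.6, 1.4, 0.2]
```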

Testing data set:

0.222222222 0.625 0.06779661 0.041666667

0.166666667 0.416666667 0.06779661 0.041666667

0.111111111 0.5 0.050847458 0.041666667

0.75 0.5 0.627118644 0.541666667

0.583333333 0.5 0.593220339 0.583333333

0.722222222 0.458333333 0.661016949 0.583333333

0.444444444 0.416666667 0.694915254 0.708333333

0.611111111 0.416666667 0.711864407 0.791666667

0.527777778 0.583333333 0.745762712 0.916666667
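A quick sanity check on the testing rows, assuming they are paired in page order with the testing class labels listed further down (three per class): every value should lie in [0, 1], and the mean normalized petal length should rise from class 0 to class 1. This is an illustrative check, not part of the original experiments.

```python
from statistics import mean

# Testing rows in page order: sepal length, sepal width,
# petal length, petal width (all normalized).
TEST_X = [
    (0.222222222, 0.625, 0.06779661, 0.041666667),
    (0.166666667, 0.416666667, 0.06779661, 0.041666667),
    (0.111111111, 0.5, 0.050847458, 0.041666667),
    (0.75, 0.5, 0.627118644, 0.541666667),
    (0.583333333, 0.5, 0.593220339, 0.583333333),
    (0.722222222, 0.458333333, 0.661016949, 0.583333333),
    (0.444444444, 0.416666667, 0.694915254, 0.708333333),
    (0.611111111, 0.416666667, 0.711864407, 0.791666667),
    (0.527777778, 0.583333333, 0.745762712, 0.916666667),
]
TEST_Y = [0, 0, 0, 0.5, 0.5, 0.5, 1, 1, 1]

# Every normalized value must fall inside [0, 1].
assert all(0.0 <= v <= 1.0 for row in TEST_X for v in row)

# Mean normalized petal length (column index 2) for each class label.
petal_means = {
    label: mean(row[2] for row, y in zip(TEST_X, TEST_Y) if y == label)
    for label in sorted(set(TEST_Y))
}
print(petal_means)
```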

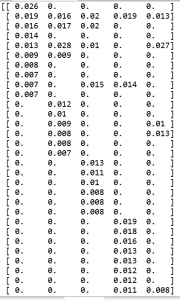

Training data set – class label values

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

0.5

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1
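The 141 training labels above come in three contiguous blocks of 47 records each (0, then 0.5, then 1), in the same order as the training rows. Rather than copying them line by line, the vector can be regenerated; `PER_CLASS` and `train_y` are illustrative names.

```python
from collections import Counter

# 47 records per class, in page order: 0, then 0.5, then 1.
PER_CLASS = 47
train_y = [0.0] * PER_CLASS + [0.5] * PER_CLASS + [1.0] * PER_CLASS

print(len(train_y), Counter(train_y))
# → 141 Counter({0.0: 47, 0.5: 47, 1.0: 47})
```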

Testing data set – class label values

0

0

0

0.5

0.5

0.5

1

1

1
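The labels place the three classes on a single scale: 0, 0.5 and 1. Judging by the petal measurements, these correspond to Iris setosa, Iris versicolor and Iris virginica, the usual order of the Iris data. If a network with one output is trained on this encoding, its continuous prediction has to be mapped back to a class. One simple rule, assumed here and not taken from the original experiments, is to pick the nearest label value; `LABELS`, `decode` and `accuracy` are hypothetical names.

```python
# Label encoding used on this page, mapped to Iris species.
LABELS = {0.0: "Iris setosa", 0.5: "Iris versicolor", 1.0: "Iris virginica"}


def decode(output):
    """Map a continuous output to the nearest label value (assumed rule)."""
    nearest = min(LABELS, key=lambda label: abs(label - output))
    return nearest, LABELS[nearest]


def accuracy(outputs, targets):
    """Fraction of outputs whose nearest label equals the target label."""
    hits = sum(decode(o)[0] == t for o, t in zip(outputs, targets))
    return hits / len(targets)


# Illustrative inputs only, not results from the original experiments.
for out in (0.12, 0.62, 0.81):
    print(out, decode(out))
```

Nearest-label decoding treats the classes as equally spaced points; it is one choice among several (a three-output network with one-hot targets is a common alternative).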
